Technology: Recommender Systems

Cristina Marco is a Postdoctoral Fellow working on content analysis for news recommender systems at NTNU in Trondheim. During the London seminar, she was part of the Information Systems work group. In her individual presentation, she talked about NTNU's RecTech project, which focuses on recommendation technology for filtering and recommending online news.

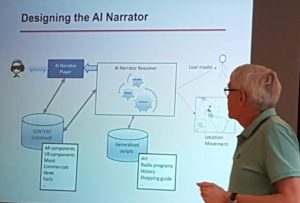

Marco asked: “Which type of information should the AI Narrator provide? Do we want it to provide recommendations or not?” Most of the seminar participants seemed to be in favor of recommendations. As Marco mentioned in her presentation: “The Internet provides large amounts of information. People are overloaded with tons of it.” The AI Narrator will need some kind of filtering, so that the user won’t be bombarded with irrelevant information.

Jon Hoem is Associate Professor of Digital Media at the Western Norway University of Applied Sciences. He was part of the Narratives work group. “How do we get surprise instead of mere recommendations?” asked Hoem. He thinks surprise should be a central element of the recommendations made by the AI Narrator: it should present users with things they didn’t know about.

I agree with Hoem: I think the AI Narrator should provide surprising recommendations. That way we might learn about new things rather than things similar to what we’ve searched for before, i.e. not just “more of the same”, like the recommendations on YouTube. I hope the next seminar will bring suggestions for technological solutions that can make these surprises happen.

During the London seminar, I was part of the Narratives work group. We talked a lot about the concept of the AI Narrator as a guide when travelling locally and internationally. It might be able to provide us with relevant news stories or stories about local historic sites, as well as recommendations for places to go, things to eat, and so on. This might be particularly useful for recommending small, local businesses that have no website, or whose website is in a language the user doesn’t speak.

The group discussed, for instance, the frustration of coming home from a trip only to discover that the area you visited had shops, museums or restaurants that you would have liked to see. Perhaps the AI Narrator could make it easier to discover these things while still on the trip.

The Interfaces work group asked what makes the AI Narrator different from, for example, Google Assistant. They nevertheless suggested that we should still build the AI Narrator, despite its similarities with other services, because we can address and focus on different issues. The general consensus seemed to be: Commercial actors will pursue similar projects anyway, so it’s important that academics also gain experience with the possibilities and potential downsides of a project like this.